Daniel Fine

Live Performance in Shared Virtual Worlds

Virtual Reality & Live Performance

Role: Co-Principal Investigator, Artistic Director, Co-Creator, Digital Media & Experience Design

Peer-Reviewed Outcomes/Dissemination:

December 2022

Piece of Me

Distributed Online Performance

Community Partner: Dream City

University of Iowa

Motion Capture & VR Studio

May 2022

Piece of Me

Workshop performances for invited audiences

University of Iowa

Motion Capture & VR Studio

February 4-12, 2020

Elevator #7

Invited Performance and Residency

Advanced Computing Center for Arts and Design

The Ohio State University

Columbus, Ohio

December 2018

Elevator #7

Performance: Mabie Theatre, University of Iowa, Iowa City, IA

March 2021

Invited Speaker, Conference Presentation

Virtual Performance Series: 3D Environments: Motion Capture and Avatars

USITT 21 Virtual Conference and Stage Expo

June 16, 2020

Invited Solo Speaker, Conference Presentation

Immersive & Mixed Reality Scenography: Designing Virtual Spaces

AVIXA at InfoComm: The Largest Professional Audiovisual Trade Show in North America

Las Vegas, Nevada

Cancelled due to COVID-19

April 4, 2020

Panel Speaker: Immersive & Mixed Reality Scenography: Designing Virtual Spaces

USITT 20 Conference & Stage Expo

Columbus, Ohio

Cancelled due to COVID-19

November 2019

Invited Solo Speaker: VR and Live Performance

LDI Tradeshow and Conference

Las Vegas, Nevada

June 9, 2019

Grants:

The total amount of grants received to date is $202,000.00.

Major grants of note include:

- $150,000 UI OVPR (Office of the Vice President for Research) Jumpstarting Grant.

- Two rounds of funding from the UI OVPR Creative Matches grant, totaling $23,000.00.

Photo Gallery:

Piece of Me

Performer in mocap suit with facial capture (iPhone mount).

Piece of Me

Performer controlling Owl avatar. TV shows what audience sees in VR headsets.

Piece of Me

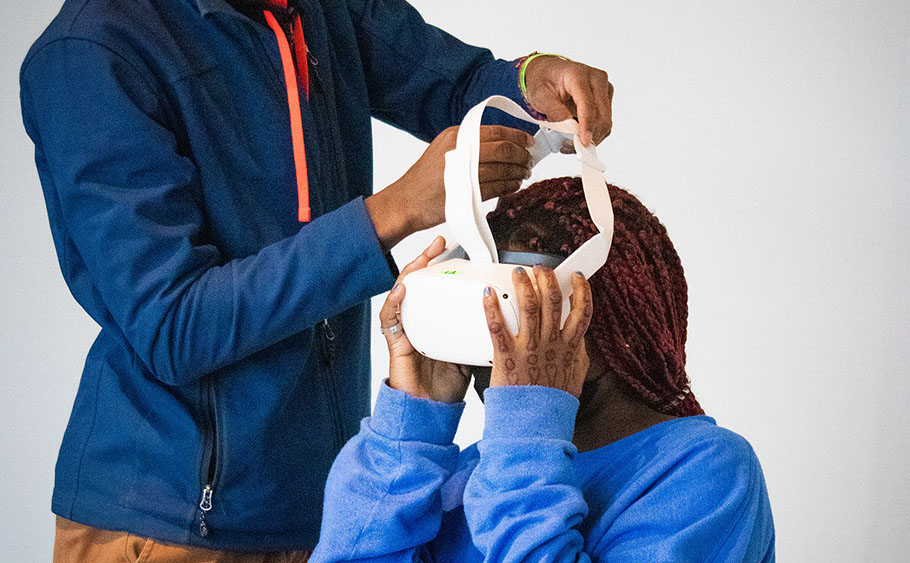

Student team member helps audience put on HMD.

Piece of Me

Left: Audience members dancing. Right: Performer in mocap suit dancing with the audience (the avatar and virtual world that the audience sees are on the screen behind the performer).

Piece of Me

Stage Manager in the foreground, director in the background.

Composite of the actor as seen by the player: in physical form (left) and on a display showing the actor in VR (right).

Iowa & OSU Performance of Elevator #7

Haptic feedback: A user stands next to a fire in the virtual world while a space heater blows hot air on her in the real world. Iowa Performance of Elevator #7.

A user looks down into a hole in the floor of the virtual world to see a live performer below him. The performer is on a green screen in the background. Iowa Performance of Elevator #7.

Live performer on a green screen, with the physical camera mounted above. The video stream is displayed on a plane below the floor of the virtual world. Iowa Performance of Elevator #7.

Left: A downward-facing video camera captures Casandra. Middle: The player looks down at Casandra. Right: The composited 2D video stream in a hole in the virtual environment, from the player’s view. Iowa Performance of Elevator #7.

A user talks to the performer in the Iowa Performance of Elevator #7.

A user sees the live performer composited into the virtual world in the Iowa Performance of Elevator #7.

A user talks to a live performer in the Iowa Performance of Elevator #7.
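The green-screen captions above describe keying a live performer out of a camera feed and placing the result in the virtual world. The page does not say how the keying was done; it may well have happened in dedicated video software or a Unity shader. What follows is therefore only a minimal CPU sketch of a simple green-dominance keyer in Python/NumPy. The names `green_screen_alpha` and `composite` and the `dominance` threshold are hypothetical.

```python
import numpy as np

def green_screen_alpha(frame, dominance=40):
    """Return an alpha mask for an RGB uint8 frame (1 = keep performer, 0 = backdrop).

    Hypothetical keyer: a pixel counts as green backdrop when its green channel
    exceeds both red and blue by more than `dominance` (on a 0-255 scale).
    """
    f = frame.astype(np.int16)  # widen so channel differences cannot wrap around
    r, g, b = f[..., 0], f[..., 1], f[..., 2]
    backdrop = (g - r > dominance) & (g - b > dominance)
    return (~backdrop).astype(np.float32)

def composite(frame, background, alpha):
    """Alpha-blend the keyed performer over a same-sized background image."""
    a = alpha[..., None]  # broadcast the mask across the three color channels
    out = a * frame.astype(np.float32) + (1.0 - a) * background.astype(np.float32)
    return out.astype(np.uint8)
```

A hard 0/1 mask like this leaves jagged edges and green spill. Production keyers usually add soft edges and spill suppression, which this sketch omits.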

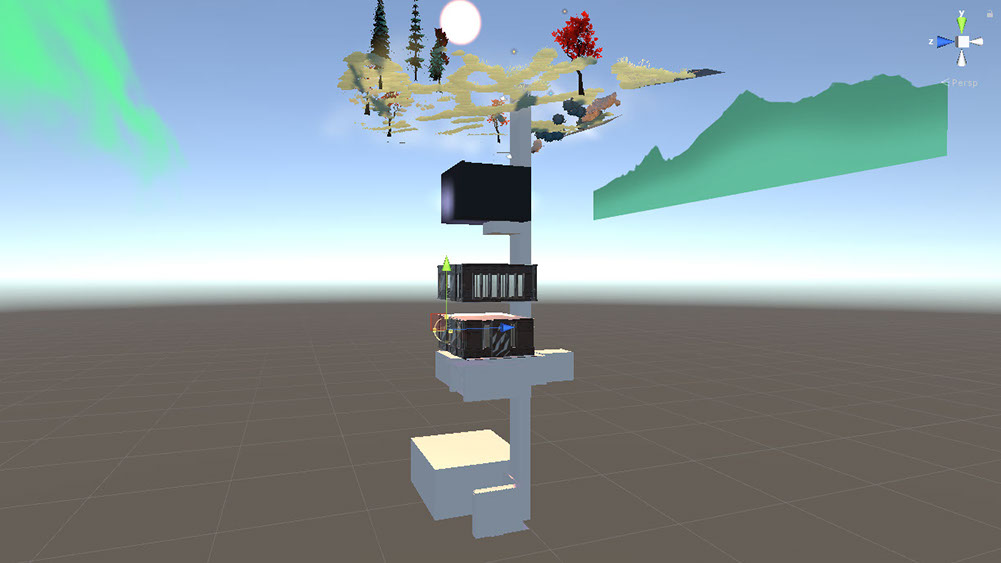

Rendering of the 3D model (built in 3ds Max) inside Unity for the Iowa Performance of Elevator #7.

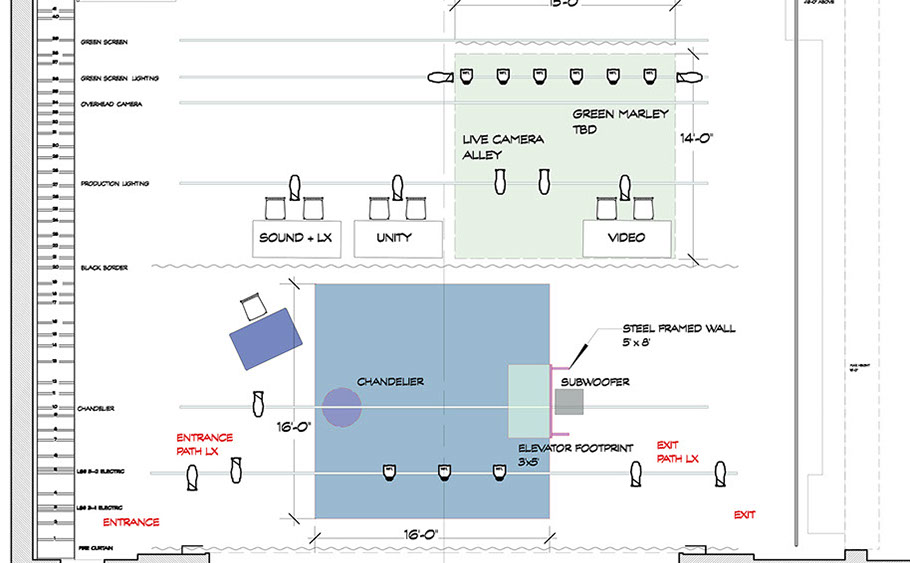

Ground plan of the stage for the audience experience in the Iowa Performance of Elevator #7.

An audience watches a user at the OSU Performance of Elevator #7.

Haptic feedback: Real flowers fall on a user as the user sees flowers falling in the headset. OSU Performance of Elevator #7.

OSU Performance of Elevator #7.

Live audience at the OSU Performance of Elevator #7.

A user puts on boots made trackable with a Vive puck at the OSU Performance of Elevator #7. The user sees digital boots in the headset.

The Iowa and Ohio team for the OSU Performance of Elevator #7.

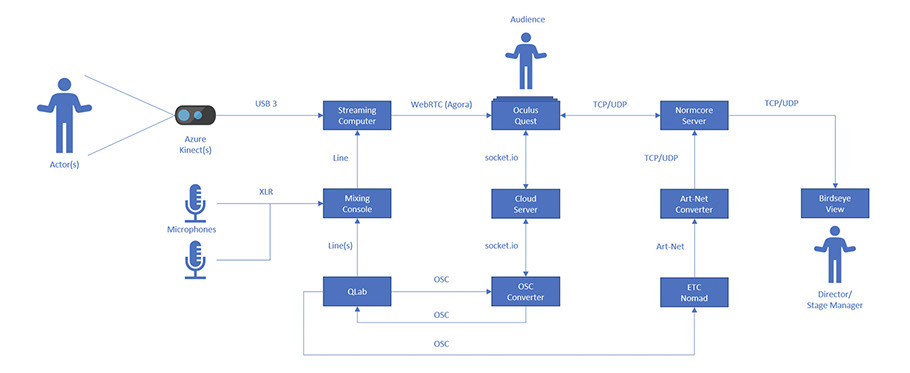

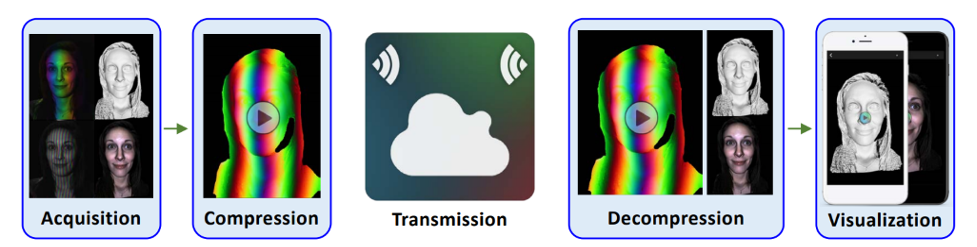

System diagram to enable one-to-many 3D video streaming of actors and multiple, remote audience members.

Pipeline for 3D video streaming for distributed VR. The Acquisition Module captures and produces 3D range data and color texture in real time. The Compression Module encodes the depth and color data into 2D images that are compressed with a standard video codec and transmitted in a low-latency video stream via WebRTC. On the remote device, the Decompression Module decodes the depth and color data to reconstruct the 3D range data. Finally, the Visualization Module displays the received 3D video data and allows real-time interaction with it.
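The page does not specify how the Compression Module maps depth into a 2D video frame. One well-known family of approaches for carrying depth through 8-bit color channels is phase-based encoding. Two channels store the sine and cosine of a phase proportional to depth, and a third, coarse channel resolves which wrap of the phase a pixel is in. The sketch below assumes that scheme. The functions `encode_depth` and `decode_depth`, the 500 to 4000 mm range, and `period` are illustrative assumptions, not the project's method. The sketch simulates only 8-bit quantization; it does not model lossy-codec artifacts such as chroma subsampling.

```python
import numpy as np

def encode_depth(depth_mm, d_min, d_max, period=0.1):
    """Pack depth into an 8-bit RGB image with a phase-based scheme (sketch).

    R and G hold the sine and cosine of a phase that wraps once every `period`
    of normalized depth; B holds normalized depth and is used only to decide
    which wrap ("fringe order") the phase belongs to.
    """
    d = np.clip((np.asarray(depth_mm, dtype=np.float64) - d_min) / (d_max - d_min), 0.0, 1.0)
    phase = 2.0 * np.pi * d / period
    rgb = np.stack([0.5 + 0.5 * np.sin(phase), 0.5 + 0.5 * np.cos(phase), d], axis=-1)
    return np.round(rgb * 255.0).astype(np.uint8)

def decode_depth(rgb, d_min, d_max, period=0.1):
    """Invert encode_depth: fine depth from the R/G phase, wrap count from B."""
    c = rgb.astype(np.float64) / 255.0
    wrapped = np.arctan2(c[..., 0] - 0.5, c[..., 1] - 0.5)  # in (-pi, pi]
    frac = np.mod(wrapped / (2.0 * np.pi), 1.0)             # position within one period
    order = np.round(c[..., 2] / period - frac)             # integer wrap count
    return d_min + (order + frac) * period * (d_max - d_min)

# Round trip on a synthetic 0.5-4.0 m ramp; only 8-bit quantization is simulated.
depth = np.linspace(500.0, 4000.0, 1000).reshape(10, 100)
restored = decode_depth(encode_depth(depth, 500.0, 4000.0), 500.0, 4000.0)
print(f"max round-trip error: {np.abs(restored - depth).max():.3f} mm")
```

The coarse B channel only needs to be accurate to within about half a period to pick the correct wrap. The final precision therefore comes from the R/G phase channels rather than from B's 256 levels.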

Example data frame captured with the Microsoft Azure Kinect DK. Left: color texture image; middle: depth map with a distance-based threshold applied; right: colored 3D reconstruction of the depth map, rendered with a point-based “hologram” effect.
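The distance-based threshold and colored 3D reconstruction in this caption can be sketched as a depth-range mask followed by standard pinhole back-projection. The intrinsics (`fx`, `fy`, `cx`, `cy`), millimetre depth units, and near/far limits used here are assumptions for illustration. A real Azure Kinect pipeline would take calibrated values from the device rather than hand-entered numbers. The function names are hypothetical.

```python
import numpy as np

def threshold_depth(depth_mm, near_mm=500, far_mm=2500):
    """Keep depths inside [near_mm, far_mm]; everything else becomes 0 (invalid)."""
    mask = (depth_mm >= near_mm) & (depth_mm <= far_mm)
    return np.where(mask, depth_mm, 0), mask

def back_project(depth_mm, color, fx, fy, cx, cy):
    """Pinhole back-projection of valid (non-zero) depth pixels to 3D points in metres.

    Returns an (N, 3) array of XYZ points and the matching (N, 3) colors,
    in row-major pixel order.
    """
    h, w = depth_mm.shape
    v, u = np.indices((h, w))  # v = row index, u = column index
    valid = depth_mm > 0
    z = depth_mm[valid].astype(np.float64) / 1000.0
    x = (u[valid] - cx) * z / fx
    y = (v[valid] - cy) * z / fy
    return np.stack([x, y, z], axis=1), color[valid]
```

Thresholding first discards the background (and the zero-valued pixels the sensor reports as invalid) before any 3D points are produced. Only the performer's points then need to be rendered.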



Videos:

Highlight video by UI Iowa Now

Performance of Elevator #7

Creative Team:

Faculty

Joseph Kearney: co-creator, virtual reality technical director

Daniel Fine: co-creator, artistic director, director, live video

Tyler Bell: co-creator, depth image and Unity development

Alan MacVey: co-creator, writer, director

Bryon Winn: co-creator, lighting & sound design

Monica Correia: co-creator, 3D design

Joseph Osheroff: primary performer

Students (Former and Current)

Emily, BS Informatics (paid ICRU position)

Brillian, BA Theatre Arts (paid ICRU position)

Travis, BS Engineering (paid ICRU position & consultant fees)

Jacob, BS Engineering (paid ICRU position)

Joe, BFA 3D Design (paid ICRU position)

Runqi Zhao, Computer Science (paid ICRU position)

Yucheng Guo, BFA 3D Design (paid ICRU position)

Ted Brown, BA Theatre Arts (paid hourly via Creative Matches grant)

Marc Macaranas, MFA Dance (paid hourly via Creative Matches grant)

Sarah Gutowski, MFA 3D Design (paid hourly via Creative Matches grant)

Xiao Song, BS Computer Science (paid hourly via Creative Matches grant)

Ryan McElroy, BA Theatre Arts (paid hourly via Creative Matches grant)

Chelsea Regan, MFA Costume Design (paid hourly via Creative Matches grant)

Ashlynn Dale, BA Theatre Arts (paid stipend via Creative Matches grant)

Octavius Lanier, MFA Acting (paid stipend via Creative Matches grant)

Chris Walbert, BA Theatre Arts (paid stipend via Creative Matches grant)

Shelby Zukin, BA Theatre Arts (paid stipend via Creative Matches grant)

Derek Donnellan, BA Theatre Arts (paid stipend via Creative Matches grant)

Courtney Gaston, MFA Design

Nick Coso, MFA Design

About:

A multi-year research project by faculty and students in the University of Iowa departments of Theatre Arts, Dance, Computer Science, Art & Art History, and Engineering that explores the emerging collision of live performance, 3D design, computer science, expanded cinema, immersive environments, and virtual worlds by creating live performances in shared virtual and physical worlds. The research develops new ways to create immersive theatrical experiences in which multiple audience members, both physically co-present and remotely located, engage with live actors through 3D video streams in virtual and hybrid physical/digital environments. To achieve this, the team devises original creative experiences, develops robust real-time depth compression algorithms, and builds advanced methods for integrating live 3D video and audio streams into spatially distributed consumer VR systems.

In addition, the research integrates live-event technology used in professional theatrical productions to interactively control lighting, sound, and video in virtual reality software. This lets VR designers build rich, dynamic environments in which lighting, sound, and video cues can be quickly fine-tuned during the design process and then adjusted interactively during performances. Because the work targets commodity-level VR devices, theatrical productions could reach people who live in remote locations or are place-bound, people with restricted mobility, and people who may be socially isolated for health and safety reasons. More broadly, the research explores ways to advance remote communication and natural interaction through live 3D video within shared virtual spaces (i.e., telepresence). Such advances could have substantial impact on distance training and education, distributed therapy, and telemedicine, areas whose needs have been accelerated by the COVID-19 pandemic.
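The page does not name the protocol used to drive lighting, sound, and video cues inside the VR software. Open Sound Control (OSC) is a common choice in show-control software, so the sketch below assumes it. It encodes and decodes a single-float OSC message and applies it to a scene-state dictionary. The address pattern `/light/<id>/intensity`, the `scene` structure, the 0 to 1 clamp, and all function names are hypothetical. Network transport (typically UDP) is omitted.

```python
import struct

def _osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes (OSC 1.0 string rule)."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)

def encode_osc_float(address: str, value: float) -> bytes:
    """Build an OSC message with one big-endian float32 argument."""
    return _osc_pad(address.encode("ascii")) + _osc_pad(b",f") + struct.pack(">f", value)

def _read_osc_string(packet: bytes, offset: int) -> tuple[str, int]:
    """Read a padded OSC string; return it and the offset just past its padding."""
    end = packet.index(b"\x00", offset)
    # The terminator plus padding ends at the next multiple of 4 after `end`.
    return packet[offset:end].decode("ascii"), (end + 4) & ~3

def decode_osc_float(packet: bytes) -> tuple[str, float]:
    """Parse an OSC message that carries exactly one float32 argument."""
    address, offset = _read_osc_string(packet, 0)
    tags, offset = _read_osc_string(packet, offset)
    if tags != ",f":
        raise ValueError(f"expected one float argument, got type tags {tags!r}")
    (value,) = struct.unpack_from(">f", packet, offset)
    return address, value

def apply_cue(scene: dict, address: str, value: float) -> None:
    """Route a hypothetical '/light/<id>/intensity' message into scene state."""
    parts = address.strip("/").split("/")
    if len(parts) == 3 and parts[0] == "light" and parts[2] == "intensity":
        # Clamp to a 0..1 intensity range (an assumption of this sketch).
        scene.setdefault("lights", {})[parts[1]] = max(0.0, min(1.0, value))

if __name__ == "__main__":
    scene = {}
    apply_cue(scene, *decode_osc_float(encode_osc_float("/light/1/intensity", 0.75)))
    print(scene)  # {'lights': {'1': 0.75}}
```

Routing cues by address keeps the console side unchanged: an operator fires the same named cue whether it targets a physical fixture or a virtual light. Messages the sketch does not recognize are simply ignored.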

Read a full history of the project here.

Press:

"The project represents the next generation of immersive theater, building on works such as the site-specific, interactive theatrical production Sleep No More, by Punchdrunk Theater Company in New York City, and mixed-reality performances that have premiered at film festivals around the world." Link to: Iowa Now Article

copyright 2024